Storage for DBAs: Having now spent over a year in the storage industry, I’ve decided it’s time to call out an industry-wide obsession that I previously wasn’t aware of: everyone in storage is obsessed with IOPS (the performance metric I/O Operations Per Second). Take a minute to perform a web search for “flash iops” and you’ll see countless headlines from vendors that have broken new IOPS records – and yes, these days my own employer is often one of them. You’d be forgiven for thinking that, in storage, IOPS was the most important thing ever.

I’m here to tell you that it isn’t. At least, not if databases are your game.

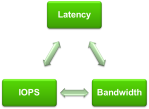

![storage-characteristics]()

Fundamental Characteristics of Storage

In a previous article I described the three fundamental characteristics of storage: latency, IOPS and bandwidth (or throughput). I even drew a simple, boxy diagram which, despite being one of the least-inspiring pieces of artwork ever created, serves me well enough to warrant its inclusion again here. These three properties are related – when one changes, the others change. With that in mind, here’s lesson #1:

High numbers of IOPS are useless unless they are delivered at low latency.

It’s all very well saying you can supply 1 million, 2 million, 4 million IOPS but if the latency sucks it’s not going to be of much value in the real world. Flash is great for delivering higher numbers of IOPS than disk, particularly for random I/Os (as I’ve written about previously), but ultimately the delay introduced by high latency is going to make real-world workloads unusable.
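To put rough numbers on that, here is a minimal sketch using Little's Law (outstanding I/Os = IOPS × latency). The law itself is standard queueing theory, but the function name and the figures are my own assumptions, picked purely for illustration and not taken from any vendor's results.

```python
def required_concurrency(iops: float, latency_seconds: float) -> float:
    """Little's Law: outstanding I/Os = throughput (IOPS) x latency (seconds)."""
    return iops * latency_seconds


# Hypothetical figures, chosen only for illustration.
target_iops = 1_000_000
for latency_ms in (0.2, 1.0, 5.0):
    outstanding = required_concurrency(target_iops, latency_ms / 1000)
    print(f"{target_iops:,} IOPS at {latency_ms} ms needs "
          f"~{outstanding:,.0f} I/Os in flight")
```

The same headline IOPS number can be reached at very different latencies simply by keeping more I/Os in flight, which is why an IOPS figure quoted without its latency says very little.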

And there’s another, oft-hidden, problem that many flash vendors face: unpredictable latency. This is particularly the case during write-heavy workloads where garbage collection cannot always keep up with load, resulting in the infamous “write cliff” (more technically described as bandwidth degradation – see figure 7 of this paper). Maybe we should revise that previous line to be lesson #1.5:

High numbers of IOPS are useless unless they are delivered at predictable low latency.
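One way to see why predictability matters is to compare the average latency with a high percentile. The sketch below is only an illustration: the sample values are invented (they are not measurements), and `percentile` is a small hypothetical helper using the nearest-rank method.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of
    the samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical latency samples in milliseconds (invented, not measured).
steady = [0.5] * 100
spiky = [0.3] * 98 + [10.3] * 2  # occasional stalls, e.g. garbage collection

for name, samples in (("steady", steady), ("spiky", spiky)):
    print(f"{name}: mean={mean(samples):.2f} ms, "
          f"p99={percentile(samples, 99):.1f} ms")
```

Both sample sets have the same mean, yet the second one makes some requests wait roughly twenty times longer. An average alone would hide that.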

But what about when we deal with volumes of data? If your requirement is to process vast amounts of information, do IOPS then become more important? Not really, because this is a bandwidth challenge – you need to design and build a system to suit your bandwidth requirements. How many GB/sec do you need? What can the storage subsystem deliver and how fast can you process it? Unlike bandwidth, an IOPS measurement does not contain the critical component of block size, so information is missing. And if you have the bandwidth figures, there is little additional value in knowing the IOPS, is there? Cue lesson #2:

Bandwidth figures are more useful for describing data volumes than IOPS.
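The missing block size is easy to demonstrate, since bandwidth is just IOPS multiplied by block size. A minimal sketch, with a hypothetical IOPS figure and block sizes chosen only as examples:

```python
def bandwidth_mb_per_sec(iops: float, block_size_kb: float) -> float:
    """Bandwidth = IOPS x block size (in MB/s, taking 1 MB = 1024 KB)."""
    return iops * block_size_kb / 1024


# Hypothetical: the same IOPS figure at three different block sizes.
for block_kb in (4, 8, 1024):
    mb_s = bandwidth_mb_per_sec(100_000, block_kb)
    print(f"100,000 IOPS at {block_kb} KB = {mb_s:,.0f} MB/s")
```

An identical IOPS number covers anything from a few hundred MB/sec to roughly 100 GB/sec depending on the block size, so on its own it tells you nothing about data volume.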

So what good are IOPS figures? And why does the storage industry talk about them all the time? Personally I think it’s a hang-up from the days of disk, when IOPS were such a limiting factor… and partly a marketing thing, because multi-million results sound impressive. Who knows? I’m more interested in what we should be asking about than what we shouldn’t.

So what does matter?

Latency Is King

Forget everything else. Latency is the critical factor because this is what injects delay into your system. Latency means lost time; time that could have been spent busily producing results, but is instead spent waiting for I/O resources.

Forget IOPS. The whole point of a flash array is that IOPS effectively become an unlimited resource. Sure, there is always a real limit – but it’s so high that it’s no longer necessary to worry about it.

Bandwidth still matters, particularly when you are doing something which requires volume, such as analytics or data warehousing. But bandwidth is a question of plumbing – designing a solution with enough capability to deliver throughout the stack: storage, fabric, HBAs, network, processor… build it and the data will come.

Latency is the “application stealth tax”, extending the elapsed times of individual tasks and processes until everything takes slightly longer. Add up all those delays and you have a significant problem. This is why, when you consider buying flash, you need to test the latency – and not just at the storage level, but end-to-end via the application (I’ll talk about this more in a following post).
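As a rough sketch of how those delays add up, consider a single process that issues its I/Os one at a time. The workload size and latency figures below are assumptions for illustration only, and `io_wait_seconds` is a hypothetical helper.

```python
def io_wait_seconds(serial_ios: int, latency_ms: float) -> float:
    """Total time one thread spends waiting when it issues I/Os serially."""
    return serial_ios * latency_ms / 1000


# Hypothetical batch job: one million single-block reads, issued serially.
reads = 1_000_000
for label, latency_ms in (("disk-like", 5.0), ("flash-like", 0.2)):
    minutes = io_wait_seconds(reads, latency_ms) / 60
    print(f"{label} ({latency_ms} ms): {minutes:.1f} minutes waiting on I/O")
```

Note that a serial job like this only ever drives 200 IOPS at 5 ms, or 5,000 IOPS at 0.2 ms. It never gets near a multi-million IOPS limit, yet its elapsed time depends entirely on latency.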

“But I Don’t Need That Many IOPS…”

This is a classic misunderstanding, often the result of confusion brought about by FUD from storage vendors who cannot deliver at the higher end of the market. To repeat my previous statement, with a good flash system IOPS will effectively become an unlimited resource. This does not mean that it’s overkill for your needs. There is no point in spending more money on a solution than is necessary, but IOPS is not the indicator you should use to determine this – decisions like that should rely entirely on business requirements. I have yet to see a business requirement that related to IOPS (emphasis on business rather than technical).

Business requirements tend to be along the lines of needing to supply trading reports faster, or reduce the time spent by call centre operatives waiting for their CRM screens to refresh. These almost always translate back into latency requirements. After all, the key to solving any performance issue is always to follow the time and find out where it is being spent. Have you noticed that latency is the only one of our three fundamental characteristics which is expressed solely in units of time?

Don’t get distracted by IOPS… it’s all about latency.
